👋 Hello, I'm U-Zyn Chua

I'm a software engineer. I build, research, and write about technology, artificial intelligence, and the open web. I also mentor startups and teach AI engineering.

Learn more about me or explore my thoughts through my articles.

Recent Posts

Bambu RFID scanner on Flipper Zero

January 17, 2026

3D printing is fun. Anyone who owns a 3D printer eventually ends up with a growing stack of filament spools at home.

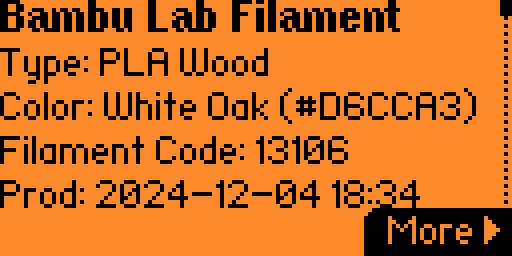

These spools come in many combinations of colors and materials, and sooner or later you'll need to top up a spool that's running low. The problem is that Bambu filament labels only show the material and variant—such as PLA Basic, PLA Wood, PLA Matte, or ABS—but not the color code or color name. This makes it surprisingly tedious to figure out which exact filament to reorder. You either dig through old purchase receipts or manually try to match colors on the website.

If you have a Flipper Zero with the flipper-bambu plugin installed, you can scan a Bambu filament spool's RFID tag to retrieve its color code, along with other details that aren't normally visible, such as temperature configurations, specifications, and production date.
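To give a feel for what "retrieving the color code" from a tag can involve, here is a minimal Python sketch that turns raw tag bytes into a hex color you could search for when reordering. The byte layout (four RGBA bytes followed by a little-endian 16-bit spool weight in grams) is an assumption made for illustration, and `decode_color_block` is a hypothetical helper. Neither is taken from the flipper-bambu plugin or confirmed by this post.

```python
import struct


def decode_color_block(block: bytes) -> dict:
    """Decode one 16-byte tag block under an ASSUMED, illustrative layout.

    Assumed layout (hypothetical, not confirmed by the post or the plugin):
      bytes 0-3: color as R, G, B, A
      bytes 4-5: spool weight in grams, unsigned 16-bit little-endian
      bytes 6-15: ignored here
    """
    if len(block) != 16:
        raise ValueError("expected a 16-byte block")
    # "<4BH" = little-endian, four unsigned bytes, then one unsigned short
    r, g, b, a, weight_g = struct.unpack_from("<4BH", block, 0)
    return {
        "color_hex": f"#{r:02X}{g:02X}{b:02X}",
        "alpha": a,
        "spool_weight_g": weight_g,
    }


# Made-up sample bytes, not a dump from a real spool
sample = bytes([0xFF, 0x6A, 0x13, 0xFF]) + struct.pack("<H", 1000) + bytes(10)
info = decode_color_block(sample)
print(info["color_hex"], info["spool_weight_g"])
```

The point is that a color stored as raw bytes maps directly to a hex code, which is much easier to match against a store listing than eyeballing the spool.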