Watch the OpenAI GPT 4.5 announcement video.

Messages from the announcement, some obvious, some not so:

- GPT 4.5 is going to be awesome.

- We got a glimpse of GPT 1 (08:34), circa 2018. Spoiler alert: it sucked.

- The engineering team at OpenAI is just as awkward in social settings as your company's engineering team.

-

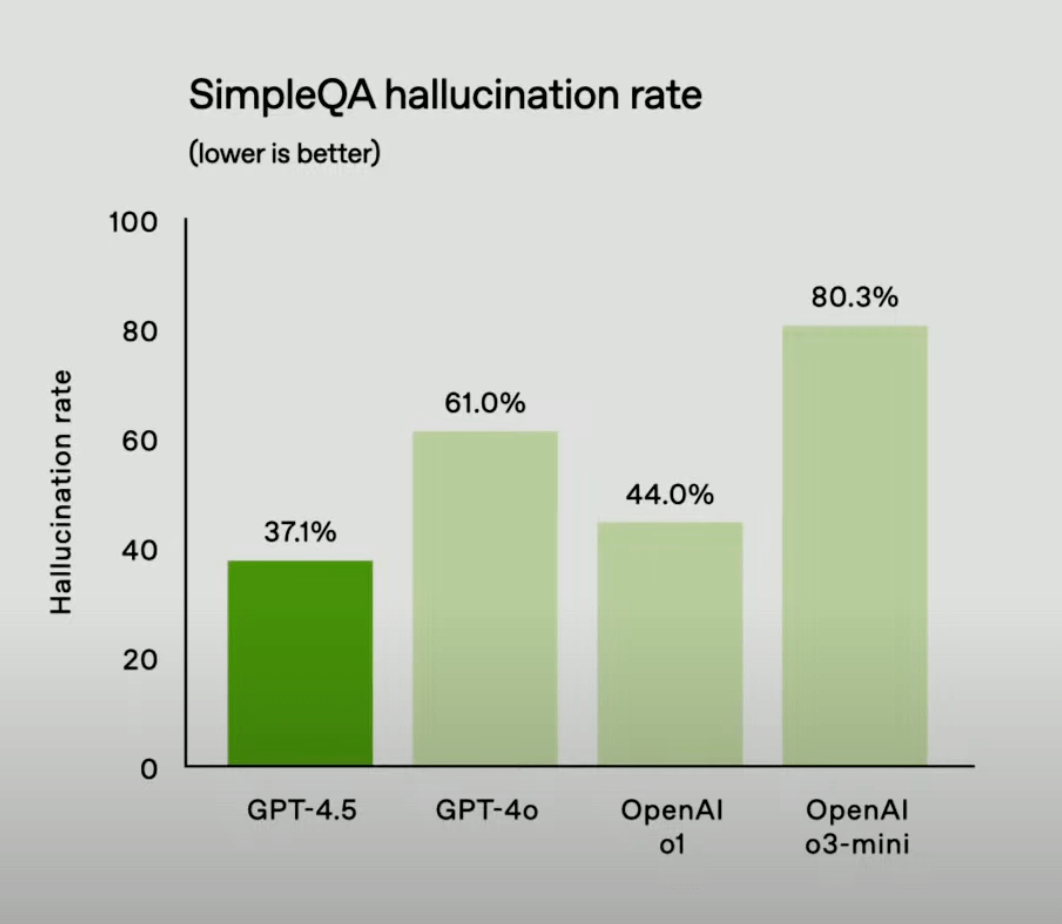

o3-mini has 80% hallucination rate! That's shocking! That explains why I have not had good experience with o3-mini.

- Key hidden message: GPT 4.5 was trained aggressively at low precision (07:40), a technique popularized recently by DeepSeek.

Low-precision training

This could become the norm for how models are trained in the future. I'm not sure yet whether that is good or bad in the long term: if competitive pressure keeps everyone training at low precision, we may never find out what high-precision training could deliver with improved techniques and more compute.

Before DeepSeek, training was often done at high precision, which requires more resources. Precision was usually reduced (quantized) only at test time (inference, or usage) to cut compute requirements for everyday use.
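To make "quantized" concrete, here is a toy sketch of what reducing precision after training can look like: a handful of floating-point weights are squeezed into 8-bit integers that share one scale factor, then expanded again. The weight values and the function names `quantize_int8` and `dequantize` are made up for illustration; real inference stacks use more elaborate schemes, and this is not how OpenAI or DeepSeek do it.

```python
# Toy sketch of symmetric 8-bit quantization (illustrative assumption only,
# not the scheme used by any particular model or library).

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    # Fall back to a scale of 1.0 if every weight is zero.
    scale = (max(abs(w) for w in weights) / 127.0) or 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

# Hypothetical "trained" weights, originally stored at high precision.
weights = [0.8123, -0.4417, 0.0031, 0.2960, -1.2700]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("integers:", quantized)
print("scale:", scale)
print("largest rounding error:", max_error)
```

The integers take a quarter of the space of 32-bit floats, at the cost of a small rounding error per weight. Low-precision training pushes the same trade-off into the training run itself instead of applying it afterwards.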

It is also worth noting that while DeepSeek demonstrated that low-precision training can yield effective results, DeepSeek's team did not invent low-precision training.